By John Ragsdale, SVP Marketing, Kahuna Labs

A customer reports intermittent failures. There’s a vague error string. A screenshot. A “started happening last week.” Your team replies quickly, asks for logs, shares a few standard steps. The customer responds… slowly. They’re busy. They’re not sure where to find the right file. The thread stretches into a day, then two.

Meanwhile, the engineer assigned to the case is doing what good engineers do: trying to reconstruct context from fragments. Skimming old cases. Checking release notes. Asking a senior teammate, “Have you seen this before?” The case isn’t “hard” yet—but it’s already drifting. Progress is measured in messages, not evidence.

Then the calendar pressure hits. A renewal is near. A launch is blocked. Someone senior gets looped in on the customer side. And suddenly the temperature changes: “Can you escalate this?” Not because the issue is impossible, or because the evidence points to a code bug, but because confidence is gone. The customer doesn’t feel momentum. Your team doesn’t feel leverage. Everyone is operating with partial information and rising stakes.

This is what makes escalations so maddening: they often feel like a moment, but they’re really a trajectory—set in motion early, by missed signals that were present long before the escalation email arrived.

This is where predictive AI becomes less about “responding faster” and more about preventing the conditions that create escalations in the first place.

The Real Reasons Escalations Happen (Beyond “It’s a Hard Issue”)

In complex product support, escalations tend to come from a few recurring root causes:

1) The first few steps are wrong—or delayed

Most escalations start quietly. The initial triage misses a key diagnostic. The first response is generic. The engineer spends hours recreating context. Customers don’t escalate because the issue is complex—they escalate because they feel uncertainty and slow progress.

2) Tribal knowledge is inaccessible when it matters

Your best engineers carry “pattern memory” in their heads: which symptoms imply which root causes, what to ask next, what diagnostic will quickly collapse the search space. When that knowledge stays trapped in people—or buried in raw tickets—other engineers take longer, take more loops, and escalations happen more often.

3) Customer reality is unique, and documentation can’t keep up

Even strong knowledge bases cover only a fraction of real-world scenarios because customer environments vary significantly (configs, integrations, versions, constraints). Escalations spike when the support model assumes “one canonical flow” but reality is “a thousand variations.”

4) Support is operating without a map

Many teams are effectively navigating a maze without a current floor plan: fragmented tools, inconsistent ticket narratives, missing context, and no shared visibility into how problems typically evolve from first symptom to resolution.

Signals That Predict Escalation (Often Hours or Days Earlier)

Escalation risk usually telegraphs itself through patterns like:

- Stalled progression: multiple back-and-forth cycles without net-new evidence (no new diagnostics, no narrowing hypotheses).

- High “research load”: long time spent gathering context, searching past tickets, or asking internal SMEs what to do next.

- Mismatched actions: steps the customer could run themselves are routed to engineers, or engineer-only tasks are sent to the customer, creating delays and frustration.

- Low-quality precedent: the “similar past tickets” exist, but they’re noisy, incomplete, or not aligned to the current stage of troubleshooting.

- Version/config sensitivity: the same symptom behaves differently across versions or specific configurations—so generic “best practices” fail.

Individually, these signals feel like normal variance. Together, they’re a pattern: this case is drifting toward escalation.
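To make the “together, they’re a pattern” idea concrete, here is a minimal Python sketch that checks a case against each signal above and flags drift only when several fire at once. The field names, thresholds, and the two-signal rule are hypothetical, chosen for illustration; this is not Kahuna’s model, which the article does not describe at that level of detail.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; a real system would
# calibrate these against historical escalation outcomes.
MAX_LOOPS_WITHOUT_EVIDENCE = 3
MAX_RESEARCH_MINUTES = 120
MIN_PRECEDENT_QUALITY = 0.5
SIGNALS_FOR_DRIFT = 2


@dataclass
class CaseSnapshot:
    """Hypothetical per-case features, one per escalation signal."""
    loops_without_new_evidence: int  # stalled progression
    research_minutes: int            # high research load
    misrouted_actions: int           # customer/engineer action mismatch
    precedent_quality: float         # 0.0 (noisy) to 1.0 (clean, stage-aligned)
    version_sensitive: bool          # symptom known to vary by version/config


def fired_signals(case: CaseSnapshot) -> list[str]:
    """Return the names of the escalation signals this case currently shows."""
    signals = []
    if case.loops_without_new_evidence >= MAX_LOOPS_WITHOUT_EVIDENCE:
        signals.append("stalled_progression")
    if case.research_minutes > MAX_RESEARCH_MINUTES:
        signals.append("high_research_load")
    if case.misrouted_actions > 0:
        signals.append("action_mismatch")
    if case.precedent_quality < MIN_PRECEDENT_QUALITY:
        signals.append("low_quality_precedent")
    if case.version_sensitive:
        signals.append("version_config_sensitivity")
    return signals


def is_drifting(case: CaseSnapshot) -> bool:
    """One signal alone is normal variance; several together suggest drift."""
    return len(fired_signals(case)) >= SIGNALS_FOR_DRIFT


case = CaseSnapshot(
    loops_without_new_evidence=4,
    research_minutes=90,
    misrouted_actions=1,
    precedent_quality=0.8,
    version_sensitive=False,
)
print(fired_signals(case))  # ['stalled_progression', 'action_mismatch']
print(is_drifting(case))    # True
```

Counting co-occurring signals, rather than alerting on any single one, mirrors the point that each signal on its own looks like normal variance.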

How Predictive AI Prevents Escalations: From “Ticket Handling” to “Path Guidance”

The most impactful shift is moving from AI that answers questions to AI that understands the troubleshooting journey.

At Kahuna, the foundation is a Troubleshooting Map™ built from historical ticket journeys—where tickets are reconstructed into step-by-step “snapshots” and clustered into repeatable paths. That means the AI can recognize not only “what this issue is,” but what stage you’re in and what paths usually succeed from here.

Three preventative strategies become possible:

1) Predict escalation risk by detecting “drift” early

When a case starts to diverge from successful historical paths—too many loops, missing diagnostics, delayed next steps—predictive alerts can trigger intervention before the customer forces it. (This is very different from simply routing “angry customers” faster.)
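Here is a minimal Python sketch of path-based drift detection. It assumes each resolved ticket has already been reduced to an ordered list of stage labels; the stage names, the three sample paths, and the `MAX_REPEATS` loop limit are all hypothetical. A case is flagged as drifting when its steps so far no longer match the opening of any successful historical path, or when it keeps cycling through the same stage. Exact-prefix matching is a deliberately simple stand-in: the article does not say how Kahuna matches cases to paths, and a production system would likely need fuzzier matching.

```python
from collections import Counter

# Hypothetical "successful paths": each is the ordered list of troubleshooting
# stages a resolved ticket went through. Made up for illustration.
SUCCESSFUL_PATHS = [
    ["triage", "collect_logs", "check_version", "apply_patch", "resolved"],
    ["triage", "collect_logs", "check_config", "fix_config", "resolved"],
    ["triage", "check_version", "upgrade", "resolved"],
]

MAX_REPEATS = 2  # hypothetical: a stage seen more than twice counts as looping


def matching_paths(current: list[str], paths: list[list[str]]) -> list[list[str]]:
    """Historical paths whose opening stages exactly match the case so far."""
    return [p for p in paths if p[: len(current)] == current]


def drift_report(current: list[str], paths: list[list[str]]) -> dict:
    """Flag a case that has left every known successful path or is looping."""
    matches = matching_paths(current, paths)
    repeats = {step: n for step, n in Counter(current).items() if n > MAX_REPEATS}
    # Stages that successful cases took next from this point, most common first.
    next_steps = Counter(p[len(current)] for p in matches if len(p) > len(current))
    return {
        "on_known_path": bool(matches),
        "looping_stages": repeats,
        "likely_next_steps": [step for step, _ in next_steps.most_common()],
        "drifting": not matches or bool(repeats),
    }


healthy = drift_report(["triage", "collect_logs"], SUCCESSFUL_PATHS)
print(healthy["likely_next_steps"])  # ['check_version', 'check_config']
print(healthy["drifting"])           # False

stuck = drift_report(
    ["triage", "collect_logs", "collect_logs", "collect_logs"], SUCCESSFUL_PATHS
)
print(stuck["looping_stages"])  # {'collect_logs': 3}
print(stuck["drifting"])        # True
```

Returning the stages that matching paths took next is a small step toward recognizing “what paths usually succeed from here”: while a case is still on a known path, those stages are candidate next steps; once it drifts, the empty list is itself the alert.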

2) Recommend the next best step with confidence, not guesswork

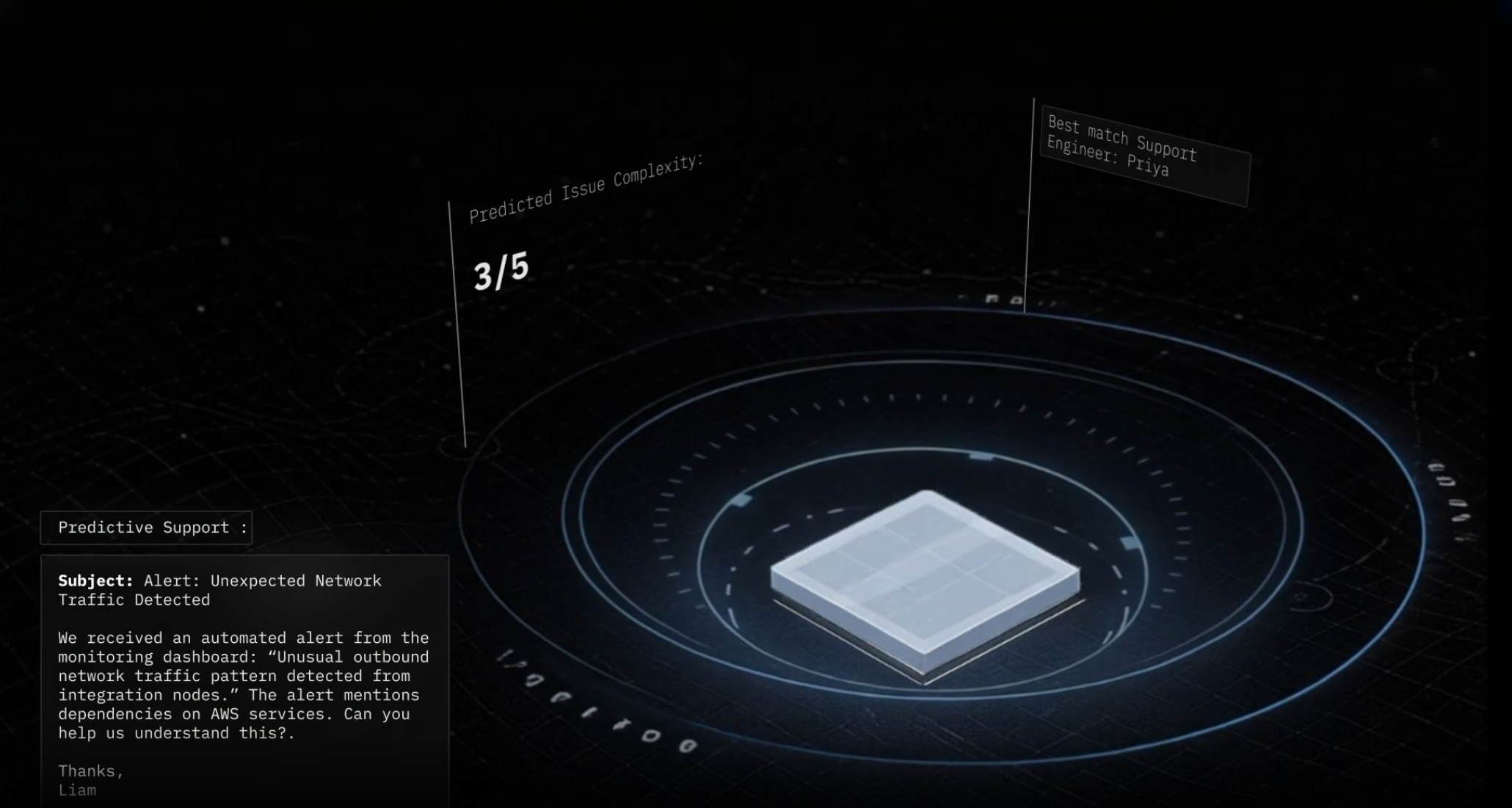

Not all guidance deserves automation. Kahuna-style approaches use scoring—Credibility Score™, Completeness Score™, and a Complexity Score™ for recommended paths—so engineers can see when the system is drawing from dense, high-quality precedent versus thin, ambiguous signals.

3) Prevent escalation by removing effort, not adding process

When confidence is high, preventative automation can do the work that typically causes delays: auto-collect diagnostics, propose probing questions, and standardize decision flows—so the case moves forward with momentum and clarity.

The Preventative Mindset Shift

Escalation prevention isn’t a “new policy.” It’s a capability. The support models that win in the next era will act less like reactive firefighters and more like orchestrators—using AI to make the invisible visible: the patterns, the paths, the signals, and the next steps that keep cases from ever becoming escalations.

The goal isn’t to eliminate every escalation. It’s to ensure escalations happen for the right reasons—true novelty and exceptions—not because the system couldn’t see what was coming.

Escalations are strongly correlated with customers’ satisfaction with support, and high escalation rates can lower the likelihood of renewal. Using AI to prevent escalations will remove a lot of friction from the customer experience and let support be seen as a relationship builder rather than a source of difficult conversations (and potential cost concessions) at renewal time.